Image by Louis Rosenberg w/Midjourney

The Metaverse: from Marketing to Mind Control

Louis Rosenberg, PhD

The United Nations Human Rights Council recently adopted a draft resolution entitled Neurotechnology and Human Rights [1]. It’s aimed at protecting humanity from devices that can “record, interfere, or modify brain activity.” To describe the risks, the resolution uses euphemistic phrases like cognitive engineering, mental privacy and cognitive liberty, but what we’re really talking about is mind control.

I applaud the U.N. for taking up the issue of mind control, but neurotechnology is not our greatest threat on this front. That's because it involves sophisticated hardware ranging from "brain implants" to wearable devices that can detect and transmit signals through the skull. Yes, these technologies could be very dangerous, but they're unlikely to be deployed at scale anytime soon. In addition, individuals who submit themselves to implants or brain stimulation will likely do so with informed consent.

On the other hand, there is an emerging category of products and technologies that could threaten our cognitive liberties across large populations, requiring nothing more than consumer-grade hardware and software from trusted corporations. These seemingly innocent systems, which will be marketed for a wide range of positive applications from entertainment to education, targeting both kids and adults, could be dangerously misused and abused without our knowledge or consent.

I’m talking about the metaverse.

Unless regulated, the metaverse could become the most dangerous tool of persuasion ever created. To raise awareness about the issues, I've written many articles about the hidden dangers and the pressing need to protect human rights [2], but I have not yet explained, from a technical perspective, why immersive technologies could be as dangerous to our cognitive liberties as brain implants. To do so, I'd like to introduce a basic engineering concept called Feedback Control.

It comes from a technical discipline called Control Theory, which is the method used by engineers to control the behaviors of a system. Think of the thermostat in your house. You set a temperature goal and if your house falls below that goal, the heat turns on. If your house gets too hot, it turns off. When working properly, it keeps your house close to the goal you set. That’s feedback control.
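The thermostat rule described above takes only a few lines to write down. The sketch below is a minimal, hypothetical Python illustration: the function name `heater_should_run` and the temperatures are invented for the example. It also adds a small deadband, which is an assumption not in the text. Real thermostats commonly use one so the heater does not flick on and off while the temperature hovers right at the goal.

```python
def heater_should_run(setpoint_c: float, measured_c: float,
                      heater_on: bool, deadband_c: float = 0.5) -> bool:
    """Hypothetical on/off (bang-bang) thermostat rule with a deadband.

    The deadband is an illustrative assumption: inside it, the heater
    keeps whatever state it already had.
    """
    if measured_c < setpoint_c - deadband_c:
        return True       # house is too cold: turn the heat on
    if measured_c > setpoint_c + deadband_c:
        return False      # house is too hot: turn the heat off
    return heater_on      # close to the goal: leave the heater as it is
```

For example, with a 20 °C goal, `heater_should_run(20.0, 18.0, heater_on=False)` returns `True` and `heater_should_run(20.0, 22.0, heater_on=True)` returns `False`. A reading of 20.2 °C leaves the heater in whatever state it was already in.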

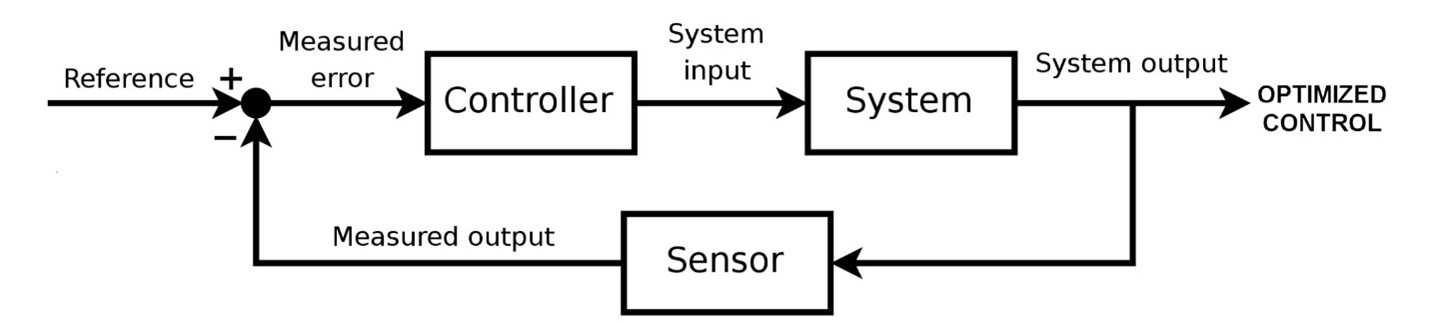

Of course, engineers like to make things more complex than they need to, so the simple concept above is generally represented in a standard format called a Control System Diagram as follows [6]:

Generic “Control System” Diagram – (credit) Wikipedia

In the heating example, your house would be the SYSTEM, a thermometer would be the SENSOR, and the thermostat would be the CONTROLLER. An input signal called the Reference is the temperature you set as the goal. The goal is compared to the actual temperature in your house (i.e., the Measured Output). The difference between the goal and the measured temperature is fed into the thermostat, which determines what the heater should do. If the house is too cold, the heater turns on. If it's too hot, the heater turns off. That's a classic control system.
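The following sketch makes the roles in the diagram concrete by wiring the SYSTEM, SENSOR, and CONTROLLER into a closed loop. Everything in it is a hypothetical toy. The `House` model, its heat-loss and heating rates, and the outside temperature are assumed numbers chosen for illustration, and the sensor is an ideal, noise-free thermometer. The key line is the one that subtracts the Measured Output from the Reference to produce the error that is fed to the controller.

```python
from dataclasses import dataclass


@dataclass
class House:
    """The SYSTEM: a crude, hypothetical thermal model of a house."""
    temp_c: float
    outside_c: float = 5.0      # assumed outdoor temperature
    loss_rate: float = 0.1      # fraction of the indoor/outdoor gap lost per step
    heater_gain_c: float = 2.0  # degrees added per step while the heater runs

    def step(self, heater_on: bool) -> None:
        self.temp_c -= self.loss_rate * (self.temp_c - self.outside_c)
        if heater_on:
            self.temp_c += self.heater_gain_c


def sensor(house: House) -> float:
    """The SENSOR: an ideal thermometer (no noise or lag)."""
    return house.temp_c


def controller(error_c: float) -> bool:
    """The CONTROLLER: run the heater whenever the house is below the goal."""
    return error_c > 0


def run_loop(reference_c: float, house: House, steps: int) -> list[float]:
    """Close the loop: measure, compare with the Reference, act, repeat."""
    history = []
    for _ in range(steps):
        measured_output = sensor(house)          # feedback path
        error = reference_c - measured_output    # Reference minus Measured Output
        house.step(controller(error))            # System Input drives the house
        history.append(house.temp_c)
    return history


history = run_loop(reference_c=20.0, house=House(temp_c=15.0), steps=60)
```

Started at 15 °C with a 20 °C goal, the simulated house warms up and then cycles in a band of roughly 18.5 °C to 20.5 °C around the goal. That steady cycling is the characteristic behavior of simple on/off feedback control.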

Of course, control systems can get very sophisticated, enabling airplanes to fly on autopilot and cars to drive themselves, even allowing robotic rovers to land on Mars. These systems need sophisticated sensors to detect driving conditions, flying conditions, or whatever else is appropriate for the task. They also need powerful controllers to process the sensor data and influence system behaviors in subtle ways. These days, the controllers increasingly use AI algorithms at their core.

With that background, let’s jump back into the metaverse.

If we strip away the hype, the metaverse is about transforming how we humans interact with the digital world. Today we mostly consume flat media viewed in the third person. In the metaverse, digital content will become immersive experiences that are spatially presented all around us. This shift to first-person interactions will deeply alter our relationship with digital information, changing us from outsiders peering in to participants engaging with content presented naturally in our surroundings. Thirty years ago, I described the potential like this [3] – "Given the ability to draw upon our highly evolved human facilities, users of virtual environment systems can obtain an intimate level of insight and understanding."

I still believe those words, but the power of immersive media can work in both directions. Used in positive ways, it can unlock insight and understanding. Used in negative ways, it could unleash the most powerful tool of persuasion and manipulation we've ever created [4]. That's because immersive media can be far more impactful than traditional media, targeting our perceptual channels in the most personal and visceral form possible – as experiences [5]. And because we humans evolved to trust our senses (i.e. to believe our eyes and ears), we are not mentally prepared for a world in which the things we see, hear, and feel directly around us could be entirely fabricated.

And yet, that’s not the most dangerous aspect of the metaverse.

To appreciate the true danger of immersive technologies, we can use the basics of Control Theory. Referring back to the standard diagram above, we see that only a few elements are needed to effectively control a system, whether it's a simple thermostat or a sophisticated robot. The two most important elements are a SENSOR to detect the system's real-time behaviors, and a CONTROLLER that can influence those behaviors. The only other elements needed are the feedback loops that continually detect behaviors and impart influences, guiding the system towards desired goals.

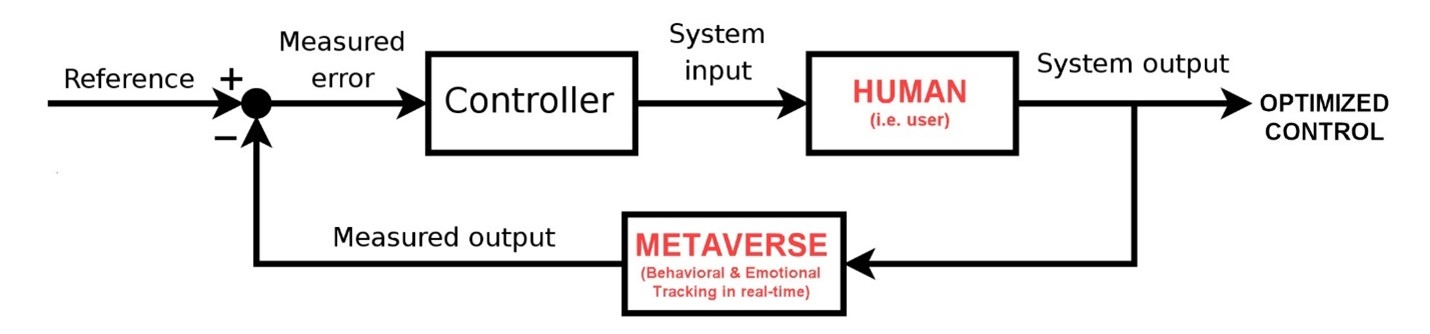

As you may have guessed, when considering the danger of the metaverse, the system being controlled is you – the human in the loop. After all, when you put on a headset and sink into the metaverse, you’re immersing yourself in an environment that has the potential to act upon you more than you act upon it. Said another way, you become an inhabitant of an artificial world run by a third party that can monitor and influence your behaviors in real time. That’s a very dangerous situation.

In the figure above, the System Input to the human user consists of the immersive sights, sounds, and touch sensations that are fed into your eyes, ears, hands, and body. This is overwhelming input – possibly the most extensive and intimate input we could imagine short of surgical brain implants. This means the ability to influence the system (i.e. you) is equally extensive and intimate. On the other side of the user in the diagram above is the System Output – your actions and reactions.

This brings us to the SENSOR box in the diagram above. In the metaverse, sensors will track everything you do in real-time – the physical motions of your head, hands, and body. That includes the direction you're looking, how long your gaze lingers, the faint motion of your eyes, the dilation of your pupils, the changes in your posture and gait – even your vital signs are likely to be tracked in the metaverse, including your heart rate, respiration rate, and blood pressure.

For example, a recent headset deployed by META can accurately track your facial expressions and eye motions [7]. The potential goes beyond sensing the expressions that other people notice to include subconscious expressions that are too fast or subtle for human observers to recognize. Known as "micro-expressions," these events can convey emotions that users had not intended to express and are unaware of revealing [9]. Users may not even be aware of feeling those emotions, leading to situations where the system literally knows users better than they know themselves.

In addition, AI technology can be used to infer deeply personal information that is not directly detected by sensors. In a recent paper, researchers at META showed that when processing “sparse data” from just a few sensors on your head and hands, AI technology could accurately predict the position, posture, and motion of the rest of your body [8]. Other researchers have shown that body motions such as gait can be used to infer a range of medical conditions from depression to dementia [10,11].

In addition to tracking basic physical information, metaverse technology already exists to infer your emotions in real-time from your facial expressions, vocal inflections, gestures and body posture. Other technologies exist to detect emotions from the blood-flow patterns on your face and the vital signs detected from sensors in your earbuds [12]. This means when you immerse yourself into the metaverse, the sensor in the system diagram above will be able to track almost everything you do and say in that world and accurately predict how you feel during each action, reaction, and interaction.

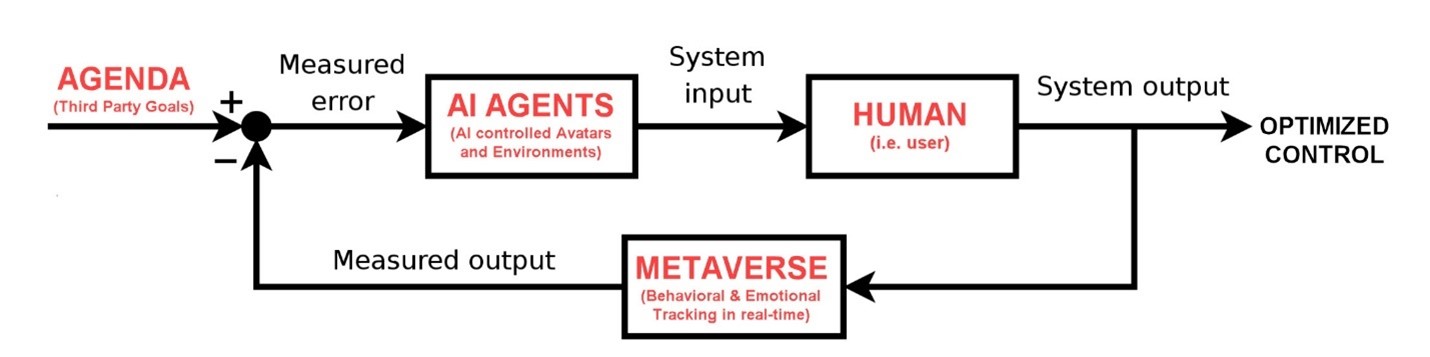

Control System Diagram for Metaverse Environments

In addition, the behavioral and emotional data described above could be stored by metaverse platforms over time. When processed by AI algorithms, this data could be turned into behavioral and emotional models that enable platforms to accurately predict how users will react when presented with target stimuli (i.e. System Input) from a controller. And because the metaverse is not just virtual reality but also augmented reality, the tracking, storing, and profiling of users will occur not just in fully simulated worlds but within the real world embellished with virtual content. In other words, metaverse platforms will be able to track and profile behaviors and emotions throughout our daily life [13].

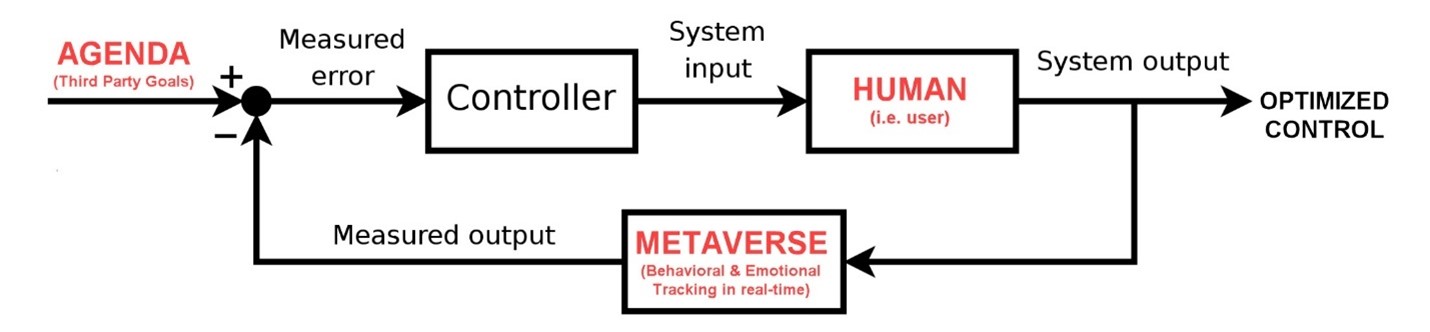

Of course, the danger is not simply that platforms can track and profile the behaviors and emotions of users, it's what they can do with that data. This brings us to the CONTROLLER box in the diagram above. The controller receives a Measured Error, which is the difference between a Reference Goal (a desired behavior) and the Measured Output (a sensed behavior). If metaverse platforms are allowed to adopt and deploy business models similar to those used by social media, the Reference Goal could easily be the AGENDA of third parties that aim to impart influence over users (see diagram below). The third party could be a paying sponsor (or state actor) that desires to persuade a user to buy a product or service, or to believe a piece of propaganda, ideology, or misinformation.

Control System Diagram with Third Party Agenda

Of course, advertising and propaganda have been around forever and can be quite impactful when deployed using traditional marketing techniques. What’s unique about the metaverse is the ability to create high-speed feedback loops in which user behaviors and emotions are continuously fed into a controller that can adapt its influence in real-time to optimize persuasion. This process can easily cross the line from marketing to manipulation. To appreciate the risks, let’s dig into the controller.

At its core, the CONTROLLER aims to "reduce the error" between the desired behavior of a system and the measured behavior of the system. It does this by imparting System Input, shown in the diagram above as an innocent-looking arrow. In the metaverse, this innocent arrow represents the ability of platforms to modify the virtual or augmented environment the user is immersed within.
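Setting the metaverse aside for a moment, "reducing the error" is what every feedback controller does, and it need not be all-or-nothing like a basic thermostat. The sketch below is a generic textbook proportional-integral (PI) controller acting on a hypothetical house-heating model. The gains `kp` and `ki`, the heat-loss rate, and the assumption that the heater can deliver whatever heat is requested are all illustrative choices, not a model of any real platform. At each step, the controller computes the Measured Error, combines it with the error accumulated so far, and adjusts the System Input.

```python
def pi_control_errors(reference_c: float, start_c: float, steps: int,
                      kp: float = 0.5, ki: float = 0.1,
                      outside_c: float = 5.0,
                      loss_rate: float = 0.1) -> list[float]:
    """Run a PI feedback loop and return the error after each step.

    Hypothetical plant: each step the house loses `loss_rate` of its
    indoor/outdoor temperature gap and gains whatever heat the controller
    requests (no clamping, i.e. the heater is assumed never to saturate).
    """
    temp_c = start_c
    integral = 0.0
    errors = []
    for _ in range(steps):
        error = reference_c - temp_c        # Measured Error: goal minus measurement
        integral += error                   # running sum of past errors
        heat = kp * error + ki * integral   # System Input chosen by the controller
        temp_c += -loss_rate * (temp_c - outside_c) + heat
        errors.append(reference_c - temp_c)
    return errors


errors = pi_control_errors(reference_c=20.0, start_c=15.0, steps=60)
```

Starting 5 °C below a 20 °C goal, the returned error shrinks at every step. It is 3.0 after the first step and well under 0.001 after sixty. With the integral term removed, this particular model would settle about 2.5 °C short of the goal. Accumulating past error is what lets the controller keep pushing until the gap closes.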

In other words, in an unregulated metaverse, the controller can alter the world around the user, modifying what they see and hear and feel in order to drive that user towards the desired goal. And because the controller can monitor the user in real-time, it will be able to continually adjust its tactics, optimizing the persuasive impact, moment by moment, just like a thermostat optimizes the temperature of a house.

To make this clear, let’s give some examples:

Imagine a user sitting in a coffee house in the metaverse (virtual or augmented). A third-party sponsor wants to inspire that user to buy a particular product or service, or believe a piece of messaging, propaganda, or misinformation. In the metaverse, advertising will not be the pop-up ads and videos that we're familiar with today but will be immersive experiences that are seamlessly integrated into our surroundings [14]. In this particular example, the controller creates a virtual couple sitting at the next table. That virtual couple will be the System Input that is used to influence the user.

First, the controller will design the virtual couple for maximum impact. This means the age, gender, ethnicity, clothing styles, speaking styles, mannerisms, and other qualities of the couple will be selected by AI algorithms to be optimally persuasive upon the target user based on that user’s historical profile. Next, the couple will engage in an AI-controlled conversation amongst themselves that is within earshot of the target user. That conversation could be about a car that the target user is considering purchasing, possibly framed as the virtual couple discussing how happy they are with their own recent purchase.

As the conversation begins, the controller monitors the user in real-time, assessing micro-expressions, body language, eye motions, pupil dilation, and blood pressure to detect when the user begins paying attention. This could be as simple as detecting a subtle physiological change in the user correlated with comments made by the virtual couple. Once engaged, the controller will modify the conversational elements to increase engagement. For example, if the user’s attention increases as the couple talks about the car’s horsepower, the conversation will adapt in real-time to focus on performance.

As the overheard conversation continues, the user may be unaware that he or she has become a silent participant, responding through subconscious micro-expressions, body posture, and changes in vital signs. The AI controller will highlight elements of the product that the target user responds most positively to and will provide conversational counterarguments when the user’s reactions are negative. And because the user does not overtly express objections, the counterarguments could be profoundly influential. After all, the virtual couple could verbally address emerging concerns before those concerns have fully surfaced in the mind of the target user. This is not marketing, it’s manipulation.

And in an unregulated metaverse, the target user may believe the virtual couple are avatars controlled by other patrons. In other words, the target user could easily believe they are overhearing an authentic conversation among users and not realize it’s a promotionally altered experience that was targeted specifically at them, injected into their surroundings to achieve a particular agenda [15].

And that’s a relatively benign example. Instead of pushing the features of a new car or toy, the third-party agenda could be to influence the target user about a political ideology, extremist propaganda, or outright misinformation or disinformation. In addition, the example above targets the user as a passive observer of a promotional experience in his or her metaverse surroundings. In more aggressive examples, the controller will actively engage the user in targeted promotional experiences.

For example, consider a situation in which an AI-controlled avatar that looks and sounds like any other user in an environment engages the target user in an agenda-driven promotional conversation. In an unregulated metaverse, the user may be entirely unaware that he or she has been approached by a targeted advertisement and might instead believe he or she is conversing with another user. The conversation could start out very casual but gradually steer toward a prescribed agenda.

In addition, the controller will likely have access to a wealth of data about the target user, including their interests, values, hobbies, education, political affiliation, etc. – and will use this to craft dialog that optimizes engagement. The controller will also have access to real-time information about the user, including facial expressions, vocal inflections, body posture, eye motions, pupil dilation, facial blood-flow patterns, and potentially blood pressure, heart rate, and respiration rate. It will adjust its conversational tactics in real time based on the target user's overt verbal responses in combination with subtle and potentially subconscious micro-expressions and vital signs.

It is well known that AI systems can outplay the best human competitors at chess, Go, poker, and a wealth of other games of strategy. From that perspective, what chance does an average consumer have when engaged in promotional conversation with an AI agent that has access to that user's personal background and interests, and can adapt its conversational tactics in real time based on subtle changes in pupil dilation and blood pressure? The potential for violating a user's cognitive liberty through this type of feedback control in the metaverse is so significant that it likely borders on outright mind control.

To complete the diagram for metaverse-based feedback control, we can replace the generic word controller with AI-based software that alters the environment or injects conversational avatars that impart optimized influence on target users. This is expressed using the phrase AI AGENTS below.

Rosenberg’s Scenario for Metaverse-based Mind Control

As expressed in the paragraphs above, the public should be aware that large metaverse platforms could be used to create feedback-control systems that monitor users' behaviors and emotions in real time and employ AI agents to modify their immersive experiences to maximize persuasion. This means that large and powerful platforms could track billions of people and impart influence on any one of them by altering the world around them in targeted and adaptive ways.

This scenario is frightening but not farfetched.

In fact, it could be the closest thing to “playing god” that any mainstream technology has ever achieved. That’s a bold statement and I don’t make it lightly. I’ve been in this field for over 30 years, starting as a researcher at Stanford, NASA, and the US Air Force and then founding a number of successful companies in this space [16]. I genuinely believe that the metaverse can be a positive technology for humanity. But if we don’t protect against the downsides by crafting thoughtful regulation, it could challenge our most sacred personal freedoms including our basic capacity for free will [17-19].

REFERENCES

[1] United Nations. (n.d.). A/HRC/51/L.3 vote item 3 – 40th meeting, 51st Regular Session Human Rights Council | UN web TV. United Nations. https://media.un.org/en/asset/k1d/k1dcro5var

[2] Louis Rosenberg. 2022. Regulation of the Metaverse: A Roadmap: The risks and regulatory solutions for largescale consumer platforms. In Proceedings of the 6th International Conference on Virtual and Augmented Reality Simulations (ICVARS ’22). Association for Computing Machinery, New York, NY, USA, 21–26. https://doi.org/10.1145/3546607.3546611

[3] Rosenberg, Louis Barry (1994). "Virtual Fixtures: Perceptual Overlays Enhance Operator Performance in Telepresence Tasks." Stanford University, U.M.I. 19971994.

[4] Robertson, D. (2022, September 14). The most dangerous tool of persuasion. POLITICO. https://www.politico.com/newsletters/digital-future-daily/2022/09/14/metaverse-most-dangerous-tool-persuasion-00056681

[5] Breves, Priska. "Biased by Being There: The Persuasive Impact of Spatial Presence on Cognitive Processing." Computers in Human Behavior, vol. 119, 2021, p. 106723. https://doi.org/10.1016/j.chb.2021.106723

[6] Wikimedia Foundation. (2022, September 19). Control theory. Wikipedia. Retrieved October 18, 2022, from https://en.wikipedia.org/wiki/Control_theory

[7] Johnson, K. (2022, October 13). Meta's VR Headset Harvests Personal Data Right Off Your Face. WIRED. https://www.wired.com/story/metas-vr-headset-quest-pro-personal-data-face/

[8] Winkler, A. (2022, September 20). QuestSim: Human Motion Tracking from Sparse Sensors with Simulated Avatars. https://arxiv.org/abs/2209.09391

[9] Li, Xiaobai & Hong, Xiaopeng & Moilanen, Antti & Huang, Xiaohua & Pfister, Tomas & Zhao, Guoying & Pietikainen, Matti. (2017). Towards Reading Hidden Emotions: A Comparative Study of Spontaneous Micro-Expression Spotting and Recognition Methods. IEEE Transactions on Affective Computing. PP. 1-1. 10.1109/TAFFC.2017.2667642.

[10] Wang Y, Wang J, Liu X, Zhu T. Detecting Depression Through Gait Data: Examining the Contribution of Gait Features in Recognizing Depression. Front Psychiatry. 2021 May 7;12:661213. doi: 10.3389/fpsyt.2021.661213. PMID: 34025483; PMCID: PMC8138135.

[11] Jacobs, S. (2022, October 12). Abnormality of Gait as a Predictor of Non-Alzheimer’s Dementia. New England Journal of Medicine. https://www.nejm.org/doi/full/10.1056/NEJMoa020441

[12] Benitez-Quiroz CF, Srinivasan R, Martinez AM. Facial color is an efficient mechanism to visually transmit emotion. Proc Natl Acad Sci U S A. 2018 Apr 3;115(14):3581-3586. doi: 10.1073/pnas.1716084115. Epub 2018 Mar 19. PMID: 29555780; PMCID: PMC5889636.

[13] Rosenberg, L. (2022, August 30). How a parachute accident helped jump-start augmented reality. IEEE Spectrum. Retrieved October 1, 2022, from https://spectrum.ieee.org/history-of-augmented-reality

[14] Rosenberg, L. (2022). Marketing in the Metaverse: A fundamental shift. Future of Marketing Institute. DOI: 10.13140/RG.2.2.35340.80003

[15] Rosenberg, L. (2022, August 21). Deception vs authenticity: Why the metaverse will change marketing forever. VentureBeat. https://venturebeat.com/ai/deception-vs-authenticity-why-the-metaverse-will-change-marketing-forever/

[16] Rosenberg, L.B. (2022). Augmented Reality: Reflections at Thirty Years. In: Arai, K. (eds) Proceedings of the Future Technologies Conference (FTC) 2021, Volume 1. FTC 2021. Lecture Notes in Networks and Systems, vol 358. Springer, Cham. https://doi.org/10.1007/978-3-030-89906-6_1

[17] Rosenberg, L. (2022, September 19). The case for demanding “immersive rights” in the metaverse. Big Think. https://bigthink.com/the-future/immersive-rights-metaverse/

[18] The XRSI Privacy and Safety Framework – XRSI – XR Safety Initiative. XRSI. (2022, February 16). Retrieved October 18, 2022, from https://xrsi.org/publication/the-xrsi-privacy-framework

[19] Rosenberg, L.B. (2022). Regulating the Metaverse, a Blueprint for the Future. In: De Paolis, L.T., Arpaia, P., Sacco, M. (eds) Extended Reality. XR Salento 2022. Lecture Notes in Computer Science, vol 13445. Springer, Cham. https://doi.org/10.1007/978-3-031-15546-8_23

About Author:

Dr. Louis Rosenberg is a pioneer of virtual and augmented reality. His work began over thirty years ago in labs at Stanford and NASA. In 1992 he developed the first interactive augmented reality (mixed reality) system at Air Force Research Laboratory (AFRL). In 1993 he founded the early Virtual Reality company Immersion Corporation. In 2004 he founded the early Augmented Reality company Outland Research. He’s been awarded over 300 patents for VR, AR, and AI technologies and published over 100 academic papers. He received his PhD from Stanford University and was a tenured professor at California State University (Cal Poly). He is currently CEO of Unanimous AI, the Chief Scientist of the Responsible Metaverse Alliance, the Global Technology Advisor to the XR Safety Initiative (XRSI) and a technology advisor to the Future of Marketing Institute (FMI).